Contact Information

Skizzle Technolabs India Pvt. Ltd.

Noel Focus, Kakkanad,

Kerala, India – 682021

A growing number of businesses use software solutions that harness Big Data, Machine Learning, and cloud-native applications. This complex and demanding area of software development requires knowledgeable, versatile people as well as tools for scalability and resilience.

Apache Kafka is one such tool. Where message brokers built on other standards fall short, Kafka lets you take on the challenges posed by Big Data.

In recent years, a large number of companies have adopted Apache Kafka. Some say it is one of the world's most popular tools. Kafka's applications range from simple message passing, through inter-service communication in microservice architectures, to full-scale platform applications.

What is Kafka? How do businesses benefit from implementing it? What should you consider before introducing Kafka in your organization? Let's dive into this simple guide to Apache Kafka.

Kafka originated around 2008. Its creators, Jay Kreps, Neha Narkhede, and Jun Rao, were working at LinkedIn at the time. It grew into a widely used open-source project, is now commercialized by Confluent, and serves as fundamental infrastructure for thousands of companies, from Airbnb to Netflix.

“Our idea was that instead of focusing on holding piles of data like our relational databases, key-value stores, search indexes, or caches, we would focus on treating data as a continually evolving and ever-growing stream, and build a data system — and indeed a data architecture — oriented around that idea” Jay Kreps — Confluent Co-Founder

Kafka's aim, then, was to handle continuous streams of data, since no solution available at the time could cope with such a data flow.

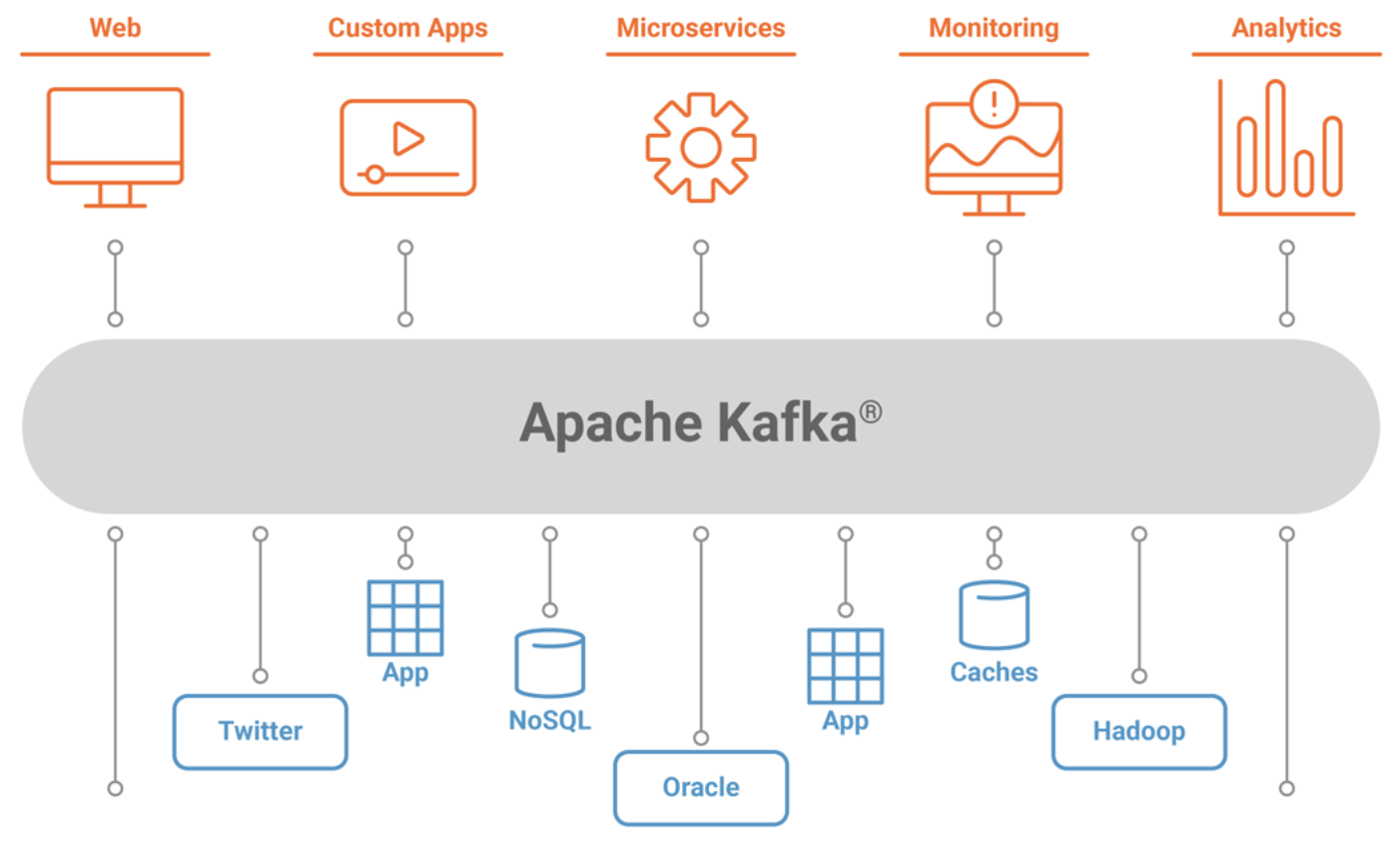

By definition, Apache Kafka is a distributed streaming platform for building real-time data pipelines and real-time streaming applications. How does it work? As a publish-subscribe system that delivers ordered, persistent messages in a scalable way.

In a distributed system, Kafka lets applications exchange messages: a sender writes messages to Kafka, and a recipient reads them from the stream that Kafka stores.

Messages are grouped into topics, Kafka's primary abstraction. A sender (producer) publishes messages to a specific topic, and a recipient (consumer) reads the messages on that topic regardless of which producer wrote them. Every message published to a topic is delivered to every consumer group subscribed to that topic; within a single group, the topic's partitions are divided among the group's members.
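To make the producer/topic/consumer vocabulary concrete, here is a minimal in-memory sketch. It is not the Kafka client API: the class `ToyTopic` and its methods are hypothetical, and the topic is simplified to a single partition modeled as an append-only log. Each consumer group keeps its own read offset, so reading does not remove messages and every group sees every message, whichever producer sent it.

```python
from collections import defaultdict


class ToyTopic:
    """Hypothetical single-partition topic: an append-only log plus per-group offsets."""

    def __init__(self, name):
        self.name = name
        self.log = []                    # messages in the order they were appended
        self.offsets = defaultdict(int)  # consumer group -> next offset to read

    def publish(self, producer, message):
        # Producers only append; they do not know who will read the message.
        self.log.append((producer, message))
        return len(self.log) - 1         # offset assigned to this message

    def poll(self, group):
        # Return everything this group has not read yet, then advance its offset.
        start = self.offsets[group]
        batch = self.log[start:]
        self.offsets[group] = len(self.log)
        return batch


orders = ToyTopic("orders")
orders.publish("web-shop", "order-1")
orders.publish("mobile-app", "order-2")

# Two independent consumer groups each receive both messages, from both producers.
print(orders.poll("billing"))    # [('web-shop', 'order-1'), ('mobile-app', 'order-2')]
print(orders.poll("analytics"))  # [('web-shop', 'order-1'), ('mobile-app', 'order-2')]
print(orders.poll("billing"))    # [] (billing has already caught up)
```

Because the log is retained rather than consumed destructively, adding a new consumer group later (say, for auditing) does not require any change on the producer side.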

Kafka runs as a cluster of one or more servers, each of which is called a broker. Clients and servers communicate over a simple, high-performance, language-agnostic TCP protocol.

Source: https://docs.confluent.io/

Kafka is used across many different industries. The technology fits naturally into dynamic, data-driven markets where enormous volumes of records must be processed as they are generated.

Immense volumes of information arriving at high velocity, combined with the high cost of data storage, push executives to look for cost-effective, resilient, and scalable real-time data processing solutions. Why? Because it's all about data… and the customer.

For modern enterprises, data is vital to success. Companies want to detect trends, put information to use, and respond to client needs in real time. Automation and predictive analytics are no longer reserved for unicorns, and those who react swiftly to market opportunities gain an edge over their data-driven competitors.

Kafka is currently the second-largest Apache project and is used by around 100,000 businesses worldwide.

Like other messaging systems, Kafka enables asynchronous data transmission between processes, applications, and servers, and it can process trillions of events a day. Big Data solutions that need this level of real-time performance can benefit from its design. Kafka lets you reliably ingest and quickly move massive quantities of data, and it is a very flexible way to connect loosely coupled IT system components.

Apache Kafka includes Kafka Streams, a client library for building microservices and applications. It is a big data technology that lets you process data in motion and quickly identify what works and what doesn't. Conventional data sources, namely transactional data such as orders, inventory, and shopping carts, are now supplemented by other sources: recommendations, social media interactions, and search queries. Adopting stream processing significantly shortens the time between an event being recorded and the system or application reacting to it, which lets more and more organizations operate in real time. All of this data can feed analytical engines and help organizations win customers.

Removing outdated systems and data silos lets decision-makers turn their data into practical insights that reach and inform every company function. Stream processing makes it easier to recognize, track, and act on the state of constantly changing data streams from different sources.

Scalability is Apache Kafka's major selling point. Distributed systems built on it are easier to extend and to operate as a service. The transition to event-driven microservice architectures brings agility to applications, not only from a development and DevOps standpoint but above all from a business viewpoint.

The IT industry is ready to bring this to the mass market. Gone are the days when only stock exchanges, which could afford such systems and needed them, executed trades and other transactions with millisecond latency. Cloud providers now offer the whole stack, including mature tools for orchestration, scaling, monitoring, and tracing, all in an automated fashion.

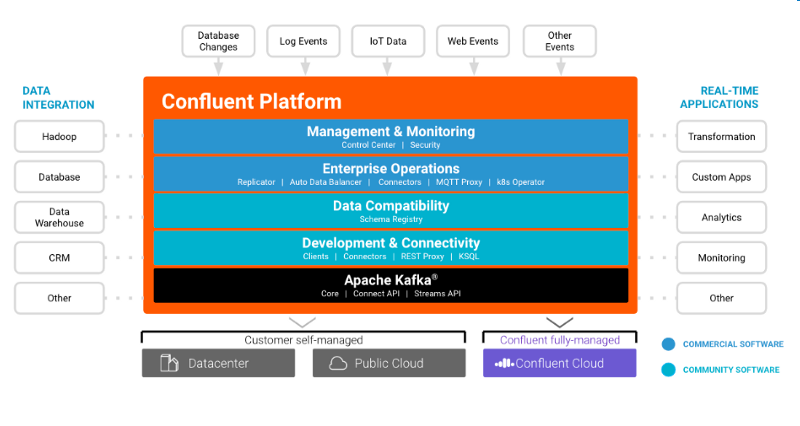

Software developers and architects love Apache Kafka because its surrounding tools make it a very attractive data integration solution. It is a natural choice for handling data distribution, because it provides an entire ecosystem of tools for managing data flow quickly.

Source: https://docs.confluent.io

Kafka is built as a distributed system and can store large amounts of data on commodity hardware. Because it is a multi-subscriber system, the same published data can be consumed many times. No wonder it is one of the best-known tools for microservices architecture. It serves as an intermediate communication layer that decouples modules. Scalability is achieved by splitting a communication channel, called a topic, into multiple partitions. This lets you run as many parallel instances of a consuming module as the topic has partitions.
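The limit described above (parallel instances up to the number of partitions) follows from each partition being owned by exactly one member of a consumer group. The sketch below uses a simple round-robin assignment; the function `assign_partitions` is hypothetical and only similar in spirit to Kafka's built-in assignors, which also handle rebalancing, multiple topics, and other details omitted here.

```python
def assign_partitions(num_partitions, consumers):
    """Hypothetical round-robin assignment of partition ids to consumer instances.

    Each partition goes to exactly one consumer in the group, so at most
    `num_partitions` consumers can be busy; any extra consumers stay idle.
    """
    assignment = {c: [] for c in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Six partitions shared by three instances: two partitions each.
print(assign_partitions(6, ["a", "b", "c"]))
# {'a': [0, 3], 'b': [1, 4], 'c': [2, 5]}

# Four partitions but five instances: instance "e" gets nothing to do.
print(assign_partitions(4, ["a", "b", "c", "d", "e"]))
# {'a': [0], 'b': [1], 'c': [2], 'd': [3], 'e': []}
```

In practice this is why the partition count is usually chosen with the expected maximum number of consumer instances in mind.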

Kafka has a very active community (Confluent and other contributors). New versions are released often, APIs evolve, and new features are added. Kafka's co-founders were all LinkedIn employees; Kafka was open-sourced in 2011 and donated to the Apache Software Foundation. At that time, it was already ingesting more than 1 billion events a day. More recently, LinkedIn has reported ingestion rates of 7 trillion messages a day.
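For a sense of scale, the reported daily figure can be converted into an average per-second rate. This is a back-of-the-envelope average only; real traffic is bursty, so peak rates are higher:

$$
\frac{7 \times 10^{12}\ \text{messages/day}}{86\,400\ \text{s/day}} \approx 8.1 \times 10^{7}\ \text{messages/s}
$$

That is roughly 81 million messages per second, sustained around the clock.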

It is relatively easy to grow data volumes with Kafka because it scales better than many other messaging systems. One reason is that Kafka guarantees ordering only within a partition. You scale Kafka by adding partitions; messages from different partitions can then be processed in parallel.
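Per-partition ordering is usually enough because related messages can be kept together: if every message with the same key goes to the same partition, that key's messages stay in order even while partitions are processed in parallel. Kafka's default partitioner hashes keys with murmur2; this sketch substitutes Python's `zlib.crc32` purely for illustration, and `partition_for` and `route` are hypothetical helpers.

```python
import zlib
from collections import defaultdict


def partition_for(key, num_partitions):
    # Stand-in for Kafka's murmur2-based default partitioner: same key -> same partition.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events, num_partitions):
    """Append each (key, value) event to the partition chosen by its key."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


events = [("user-1", "login"), ("user-2", "login"), ("user-1", "add_to_cart"),
          ("user-2", "logout"), ("user-1", "checkout")]
partitions = route(events, num_partitions=3)

# Whichever partition holds user-1 keeps its events in their original order,
# so a consumer of that partition sees login -> add_to_cart -> checkout.
user1 = [v for k, v in partitions[partition_for("user-1", 3)] if k == "user-1"]
print(user1)  # ['login', 'add_to_cart', 'checkout']
```

Events for different users may land on different partitions and be processed concurrently; only the relative order across users is lost.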

Finally, software developers operate in ecosystems of services that together work toward some higher-level business goal. It's beneficial to make these systems event-driven, and Kafka is a great tool for that.

Website activity tracking

Website activity (page views, searches, or other actions users may take) is published to central topics and becomes available for real-time processing, dashboards, and offline analytics in data warehouses like Google’s BigQuery.
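A producer for activity tracking typically turns each user action into a small, self-describing record. The sketch below builds a page-view record as (key, value) bytes, the shape a Kafka producer sends; the function `page_view_event` and all field names are hypothetical, and JSON is just one possible serialization.

```python
import json
import time


def page_view_event(user_id, url, referrer=None, ts=None):
    """Build a hypothetical page-view record as (key, value) bytes.

    Keying by user id keeps each user's clickstream in one partition (and so in
    order); the JSON value is what dashboards and warehouse loaders would read.
    """
    payload = {
        "type": "page_view",
        "user_id": user_id,
        "url": url,
        "referrer": referrer,
        "ts": ts if ts is not None else time.time(),
    }
    return user_id.encode("utf-8"), json.dumps(payload, sort_keys=True).encode("utf-8")


key, value = page_view_event("user-42", "/pricing", referrer="/home", ts=1700000000)
print(key)                       # b'user-42'
print(json.loads(value)["url"])  # /pricing
```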

Metrics

Kafka is often used in operational monitoring pipelines, enabling alerting and reporting on operational metrics. It aggregates statistics from distributed applications into centralized feeds of operational data.
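On the consuming side, a metrics feed is often reduced to per-service summaries that drive alerts. The sketch below assumes a hypothetical `(service, metric, value)` event shape and a fixed threshold; the helpers `aggregate` and `alerts` are illustrative, not part of any Kafka API.

```python
from collections import defaultdict


def aggregate(metric_events):
    """Group hypothetical (service, metric, value) events into per-metric summaries."""
    grouped = defaultdict(list)
    for service, metric, value in metric_events:
        grouped[(service, metric)].append(value)
    return {k: {"count": len(v), "mean": sum(v) / len(v), "max": max(v)}
            for k, v in grouped.items()}


def alerts(summary, metric, threshold):
    # Flag services whose worst observed value for `metric` exceeds the threshold.
    return sorted(s for (s, m), stats in summary.items()
                  if m == metric and stats["max"] > threshold)


events = [("checkout", "latency_ms", 120), ("checkout", "latency_ms", 480),
          ("search", "latency_ms", 35), ("search", "latency_ms", 45)]
summary = aggregate(events)
print(summary[("search", "latency_ms")])   # {'count': 2, 'mean': 40.0, 'max': 45}
print(alerts(summary, "latency_ms", 300))  # ['checkout']
```

A real pipeline would usually aggregate over time windows rather than over a fixed list, but the grouping step is the same.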

Log aggregation

Kafka can be used across an organization to collect logs from multiple services and make them available in a standard format to multiple consumers. It provides lower-latency processing and easier support for multiple data sources and distributed data consumption.
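The "standard format" part usually means normalizing heterogeneous log lines before (or right after) they reach a shared topic. In this sketch the two input formats, the field names, and the `normalize` helper are all hypothetical: one service emits plain `timestamp LEVEL message` lines, another emits JSON with its own field names.

```python
import json
import re

# Hypothetical plain-text format: "<timestamp> <LEVEL> <message>"
PLAIN = re.compile(r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<msg>.*)$")


def normalize(service, line):
    """Convert one raw log line into a common shape: service, ts, level, msg."""
    if line.lstrip().startswith("{"):
        # This service already logs JSON; map its field names onto the shared schema.
        raw = json.loads(line)
        return {"service": service, "ts": raw["time"],
                "level": raw["severity"].upper(), "msg": raw["message"]}
    match = PLAIN.match(line)
    if match is None:
        # Keep unparseable lines rather than dropping them silently.
        return {"service": service, "ts": None, "level": "UNKNOWN", "msg": line}
    return {"service": service, **match.groupdict()}


print(normalize("auth", "2024-05-01T10:00:00Z ERROR token expired"))
print(normalize("billing", '{"time": "2024-05-01T10:00:01Z", "severity": "warn", "message": "retrying"}'))
```

Once every line has the same shape, any number of consumers (search indexing, alerting, archiving) can read the same topic without knowing which service produced a line.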

Stream processing

A framework such as Spark Streaming reads data from a topic, processes it, and writes processed data to a new topic where it becomes available for users and applications. Kafka’s strong durability is also very useful in the context of stream processing.
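The read-process-write pattern described above can be sketched without any framework. Here plain Python lists stand in for the input and output topics, and the hypothetical `process` function tracks an offset so that a second run handles only new records. Real deployments also have to decide when to commit offsets relative to producing output, which this sketch ignores.

```python
def process(source, sink, committed_offset, transform):
    """One pass of a hypothetical consume-transform-produce loop.

    `source` and `sink` are plain lists standing in for topics. Records from
    `committed_offset` onward are transformed and appended to `sink`; the new
    offset is returned so a restart resumes where processing stopped.
    """
    for record in source[committed_offset:]:
        result = transform(record)
        if result is not None:          # a transform may filter records out
            sink.append(result)
    return len(source)


def flag_large(order):
    # Keep only orders of 100 or more and tag them for downstream consumers.
    return {**order, "flag": "large"} if order["amount"] >= 100 else None


raw_orders = [{"id": 1, "amount": 250}, {"id": 2, "amount": 15}, {"id": 3, "amount": 900}]
large_orders = []

offset = process(raw_orders, large_orders, 0, flag_large)
raw_orders.append({"id": 4, "amount": 120})
offset = process(raw_orders, large_orders, offset, flag_large)  # handles only record 4
print([o["id"] for o in large_orders])  # [1, 3, 4]
```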

Kafka is used heavily in the big data space as a reliable way to ingest and move large amounts of data very quickly.

According to StackShare, 741 companies use Kafka. Among them are Uber, Netflix, Activision, Spotify, Slack, Pinterest, Coursera, and, of course, LinkedIn.

Kafka is a messaging system that lets you publish and subscribe to streams of messages. In this respect, it is similar to products such as ActiveMQ, RabbitMQ, and IBM's MQSeries. But it is not just a message broker.

In your organization, you might have various data pipelines that facilitate communication between various services. When plenty of services communicate with each other in real-time, their architecture becomes complex, because they need many integrations and various protocols. Apache Kafka can help you decouple such data pipelines and simplify software architecture to make it manageable.
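A rough count illustrates the complexity argument. Assuming, as a worst case, that every pair of $n$ services exchanges data directly, the number of point-to-point integrations grows quadratically, whereas with a central broker each service needs only one connection:

$$
\underbrace{\binom{n}{2} = \frac{n(n-1)}{2}}_{\text{point-to-point}} \quad \text{vs.} \quad \underbrace{n}_{\text{via a broker}}, \qquad n = 10:\ 45 \ \text{vs.}\ 10
$$

Real systems rarely connect every pair, but the gap widens quickly as services are added, and each direct link may also need its own protocol.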

Kafka is overkill when you need to process only a small number of messages per day (up to several thousand). Kafka is designed to cope with high loads. Use a traditional message queue such as RabbitMQ when you don't have a lot of data.

Kafka is a great solution for delivering messages. But even though Kafka has a Streams API, it is not easy to perform data transformations on the fly. You need to build a complex pipeline of interactions between producers and consumers and then maintain the entire system, which takes a lot of work and effort. So avoid using Kafka for ETL jobs, especially where real-time processing is needed.

If you need a simple task queue, use a tool designed for that. Kafka is not designed to be a task queue; other tools, for example RabbitMQ, are better suited to such use cases.

If you need a database, use a database, not Kafka. Kafka is not good for long-term storage. It retains data for a configurable retention period, but that period generally should not be very long. Kafka also stores redundant copies of data, which can increase storage costs. Databases are optimized for storing data over the long term. They also have versatile query languages and support efficient inserts and retrieval. If relational databases don't fit your use case, consider a non-relational one (for example, MongoDB), but don't use Kafka.
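Retention is what makes Kafka a poor long-term store: once data ages out of the window, it is gone regardless of whether anyone still needs it. Kafka's real topic setting is `retention.ms` (the broker default, `log.retention.hours`, is 168 hours). The sketch below is a simplification, since Kafka deletes whole log segments rather than individual records; `Record` and `apply_retention` are hypothetical.

```python
from dataclasses import dataclass

DAY_MS = 24 * 60 * 60 * 1000
SEVEN_DAYS_MS = 7 * DAY_MS  # mirrors the 168-hour broker default


@dataclass
class Record:
    offset: int
    timestamp_ms: int
    value: str


def apply_retention(log, now_ms, retention_ms=SEVEN_DAYS_MS):
    """Drop records older than the retention window (simplified, per record).

    Surviving records keep their offsets; a consumer that falls behind the
    window simply finds the oldest data gone.
    """
    cutoff = now_ms - retention_ms
    return [r for r in log if r.timestamp_ms >= cutoff]


now = 30 * DAY_MS
log = [Record(0, now - 10 * DAY_MS, "old order"),
       Record(1, now - 2 * DAY_MS, "recent order"),
       Record(2, now - 1000, "new order")]
kept = apply_retention(log, now)
print([r.offset for r in kept])  # [1, 2]
```

A database, by contrast, keeps the "old order" row until someone explicitly deletes it, and lets you query it by any field.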

In this article, we described Apache Kafka and the use cases it suits best. It should be easy to see why Kafka is such a powerful streaming platform. Kafka is a valuable tool in scenarios that require real-time data processing and application activity tracking, as well as for monitoring. At the same time, Kafka shouldn't be used for on-the-fly data transformations or data storage, or when all you need is a simple task queue.

Need help with Apache Kafka? Our engineering expertise can help! Contact us.
