Skizzle Technolabs India Pvt. Ltd.

Noel Focus, Kakkanad,

Kerala, India – 682021

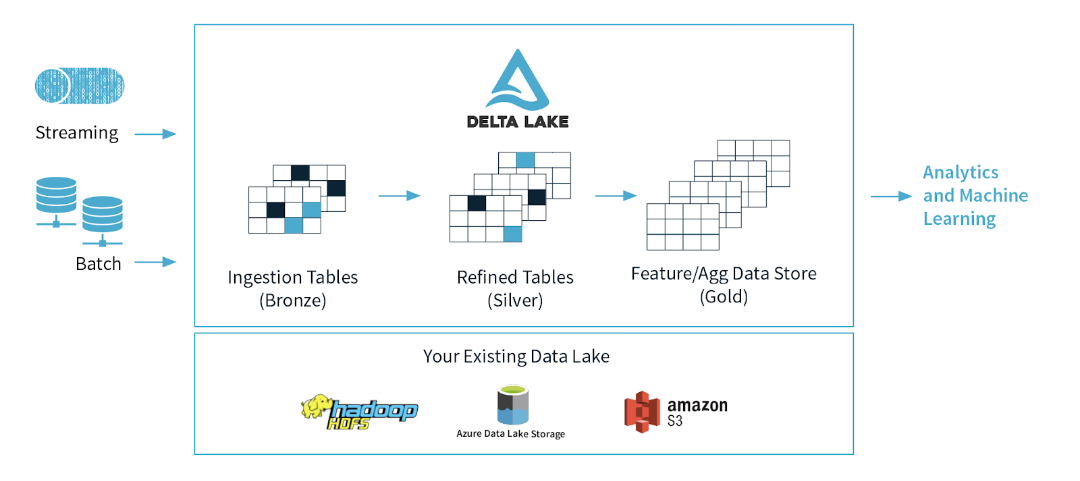

According to Databricks, Delta Lake is an open-source transactional storage layer that runs on top of cloud or on-premises object storage. By providing ACID transactions, data versioning, and rollback, Delta Lake promises to bring a layer of reliability to organizational data lakes.

This article introduces Azure Data Lake Storage and Databricks Delta Lake, and explains how this open-source storage layer, built to work with Apache Spark, brings reliability and improved performance to data lakes.

Azure Storage is now the foundation for constructing enterprise data lakes on Azure thanks to Data Lake Storage Gen2. Data Lake Storage Gen2 was built from the ground up to handle many petabytes of data while maintaining hundreds of gigabits of throughput. It allows you to easily manage large amounts of data.

A key feature of Data Lake Storage Gen2 is the addition of a hierarchical namespace to Blob storage. For efficient data access, the hierarchical namespace organizes objects and files into a hierarchy of directories. Conventional object storage only emulates a directory structure by using slashes in object names. With a true hierarchical namespace, an operation such as renaming or deleting a directory becomes a single atomic metadata operation on the directory.
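To make the difference concrete, here is a minimal sketch in plain Python. Both `FlatStore` and `HierarchicalStore` are hypothetical toy classes written for this illustration, not Azure APIs; they only count how many entries a directory rename has to touch in each model.

```python
# Toy comparison: renaming a "directory" in a flat object store versus a
# hierarchical namespace. Both classes are hypothetical illustrations.


class FlatStore:
    """Objects keyed by full path; directories exist only as name prefixes."""

    def __init__(self):
        self.objects = {}

    def put(self, path, data):
        self.objects[path] = data

    def rename_dir(self, old, new):
        # Every object under the prefix has to be re-keyed individually.
        touched = 0
        for path in [p for p in self.objects if p.startswith(old + "/")]:
            self.objects[new + path[len(old):]] = self.objects.pop(path)
            touched += 1
        return touched


class HierarchicalStore:
    """Directories are real nodes: a dict of name -> child dict or file data."""

    def __init__(self):
        self.root = {}

    def put(self, path, data):
        *dirs, name = path.split("/")
        node = self.root
        for d in dirs:
            node = node.setdefault(d, {})
        node[name] = data

    def rename_dir(self, old, new):
        # Top-level directories only, to keep the sketch short:
        # a single directory entry moves, whatever it contains.
        self.root[new] = self.root.pop(old)
        return 1


flat, tree = FlatStore(), HierarchicalStore()
for i in range(1000):
    flat.put(f"raw/part-{i}.parquet", b"")
    tree.put(f"raw/part-{i}.parquet", b"")

flat_ops = flat.rename_dir("raw", "staged")
tree_ops = tree.rename_dir("raw", "staged")
print(flat_ops, tree_ops)
```

In the flat model the cost of the rename grows with the number of files, and a failure halfway through leaves the directory split across two names. In the hierarchical model a single entry changes.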

All raw data from various sources can be saved in an Azure Data Lake without first specifying a structure. This differs from a data warehouse, where data must be processed and formatted according to business requirements before it is loaded.

All forms of data from various sources can be stored in Azure Data Lake in a cost-effective, scalable, and easy-to-process manner.

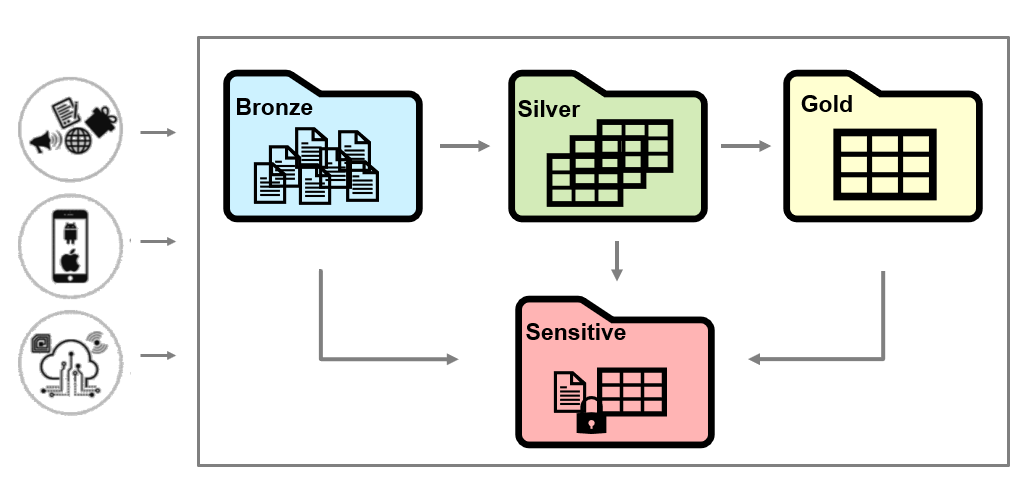

It is critical that data is organized adequately across the data lake. Otherwise it becomes a store where everything is simply poured in and is later hard to access or even find.

It’s a good idea to divide your data lakes into distinct zones:

It is common for multiple data pipelines to read and write data in an Azure Data Lake at the same time. Because big data pipelines rely on distributed writes that can run for a long time, maintaining data integrity is difficult. Delta Lake was released to address this issue.

Delta Lake is an open-source storage layer that sits on top of an Azure Data Lake and works with Apache Spark. Its key function is to ensure data integrity through ACID transactions while allowing concurrent reads and writes against the same directory or table, bringing reliability to massive data lakes. ACID is an acronym for Atomicity, Consistency, Isolation, and Durability.

Atomicity: Delta Lake ensures atomicity by keeping track of all fully finished operations in a transaction log; if an operation isn’t completed successfully, it isn’t logged. This attribute ensures that no data is written in parts, which can lead to data that is inconsistent or corrupted.

Consistency: Writes are committed with serializable isolation, so readers always see a consistent view of the data, even while writes are in progress.

Isolation: Delta Lake permits concurrent writes to tables, resulting in a Delta table that looks the same as if all the writes had been done sequentially (isolated).

Durability: Once a write is committed, the data is stored on persistent storage and remains available even if the system fails. Delta Lake satisfies the durability property as well.
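The role of the transaction log in atomicity can be sketched with a toy model. This is not Delta Lake's implementation: the `_log` directory, the JSON layout, and the functions `commit`, `write_rows`, and `read_table` are all assumptions made for this illustration. The idea it shows is the one described above: data files are written first, a log entry is added only when the operation finishes, and readers trust only the log.

```python
import json
import os
import tempfile


def commit(table_dir, version, added_files):
    """Publish a commit by creating the log entry for `version` exactly once."""
    log_dir = os.path.join(table_dir, "_log")
    os.makedirs(log_dir, exist_ok=True)
    entry = os.path.join(log_dir, f"{version:020d}.json")
    # Mode "x" raises FileExistsError if the entry already exists, so two
    # writers cannot both claim the same version number.
    with open(entry, "x") as f:
        json.dump({"add": added_files}, f)


def write_rows(table_dir, version, name, rows, fail_before_commit=False):
    """Write a data file, then commit it to the log."""
    with open(os.path.join(table_dir, name), "w") as f:
        json.dump(rows, f)
    if fail_before_commit:
        # Simulates a pipeline that dies after writing data but before logging.
        raise RuntimeError("simulated pipeline failure")
    commit(table_dir, version, [name])


def read_table(table_dir):
    """Read only the files named in the log; uncommitted files are invisible."""
    log_dir = os.path.join(table_dir, "_log")
    rows = []
    for entry in sorted(os.listdir(log_dir)):
        with open(os.path.join(log_dir, entry)) as f:
            for name in json.load(f)["add"]:
                with open(os.path.join(table_dir, name)) as data:
                    rows.extend(json.load(data))
    return rows


table = tempfile.mkdtemp()
write_rows(table, 0, "part-0.json", [{"id": 1}, {"id": 2}])
try:
    write_rows(table, 1, "part-1.json", [{"id": 3}], fail_before_commit=True)
except RuntimeError:
    pass  # part-1.json stays on disk as an orphan but is never read

result = read_table(table)
print(result)
```

The failed write leaves a stray file behind, yet the table content is unchanged because no log entry points to it. A production system additionally needs an atomic "create if absent" guarantee from the underlying storage and a cleanup process for orphaned files, both of which this sketch leaves out.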

The open-source Delta Lake is integrated into Azure Databricks, the managed Databricks service on Azure, making it immediately available to its users.

Despite the benefits of data lakes, the rising volume of data kept in a single data lake poses a number of issues.

When a pipeline fails while writing to a data lake, the data is left partially written or damaged, which has a significant impact on data quality.

Delta, by contrast, is ACID compliant: a write operation either completes successfully or fails completely, which prevents damaged data from being written.

Developers must design the business logic for streaming and batch pipelines independently, using different technologies (e.g., Azure Data Factory for batch sources and Stream Analytics for stream sources). Furthermore, multiple jobs cannot safely read from and write to the same data at the same time.

With Delta, the same functions may be applied to batch and streaming data, ensuring that data is consistent in both sinks regardless of business logic changes. Delta also enables the reading of consistent data as fresh data is ingested via structured streaming.
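The benefit of writing the business logic once can be illustrated with a small plain-Python sketch. It does not use Spark or Delta; `add_total` and `order_stream` are hypothetical names, and a Python generator stands in for a streaming source.

```python
from typing import Iterable, Iterator


def add_total(records: Iterable[dict]) -> Iterator[dict]:
    """Business logic written once: compute an order total per record."""
    for record in records:
        yield {**record, "total": record["qty"] * record["unit_price"]}


def order_stream() -> Iterator[dict]:
    """Stand-in for a streaming source that yields records as they arrive."""
    yield {"order": "A", "qty": 2, "unit_price": 5}
    yield {"order": "B", "qty": 1, "unit_price": 7}


batch_orders = [
    {"order": "A", "qty": 2, "unit_price": 5},
    {"order": "B", "qty": 1, "unit_price": 7},
]

# The same function serves both paths, so a logic change applies to both.
batch_result = list(add_total(batch_orders))
stream_result = [record for record in add_total(order_stream())]

print(batch_result == stream_result)
```

Because both paths share one function, a change to the calculation cannot make the batch and streaming results drift apart.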

Incoming data can change over time. In a data lake, this can lead to data type compatibility problems, incorrect data entering the lake, and similar issues.

To avoid data corruption, Delta can prevent incoming data with a mismatched schema from entering the table.

When strict enforcement isn't required, users can deliberately evolve the table's schema to adapt to data that changes over time.
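The two behaviours, enforcement by default and evolution on request, can be sketched with a toy in-memory table. `ToyTable`, `SchemaMismatch`, and the `merge_schema` flag are assumptions made for this example and are not Delta Lake's API (in Delta itself, evolution is opted into at write time, for example through the `mergeSchema` write option).

```python
class SchemaMismatch(Exception):
    """Raised when a batch does not fit the table's schema."""


class ToyTable:
    """In-memory table with a schema of column name -> Python type."""

    def __init__(self, schema):
        self.schema = dict(schema)
        self.rows = []

    def append(self, rows, merge_schema=False):
        # Validate the whole batch first so a bad batch is rejected in full.
        new_columns = {}
        for row in rows:
            for column, value in row.items():
                expected = self.schema.get(column, new_columns.get(column))
                if expected is None:
                    if not merge_schema:
                        raise SchemaMismatch(f"unexpected column: {column}")
                    new_columns[column] = type(value)
                elif not isinstance(value, expected):
                    raise SchemaMismatch(
                        f"{column}: expected {expected.__name__}, "
                        f"got {type(value).__name__}"
                    )
        self.schema.update(new_columns)
        self.rows.extend(rows)


events = ToyTable({"id": int, "amount": float})
events.append([{"id": 1, "amount": 9.5}])

try:
    events.append([{"id": 2, "amount": "n/a"}])  # wrong type: rejected
except SchemaMismatch as err:
    print("rejected:", err)

try:
    events.append([{"id": 3, "amount": 1.0, "country": "IN"}])  # new column
except SchemaMismatch as err:
    print("rejected:", err)

# Opting in to evolution lets the new column through and widens the schema.
events.append([{"id": 3, "amount": 1.0, "country": "IN"}], merge_schema=True)
print(sorted(events.schema), len(events.rows))
```

Rejected batches leave both the rows and the schema untouched, which is the property that keeps faulty data out of the table.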

Because data in a Data Lake is continually changing, it would be impossible for a data scientist to repeat an experiment with the same conditions from a week ago unless the data was replicated many times.

With Delta, users can revert to a previous version of the data to replicate experiments, repair incorrect updates, deletes, or other transformations that produced faulty data, audit data, and so on.

The structure of data changes over time as business concerns and requirements change. With the help of Delta Lake, however, adding new dimensions as the data changes is simple. Delta Lake improves the performance, reliability, and manageability of data lakes. Hence, use a secure and scalable cloud solution to improve the data lake's quality.
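Versioned reads can be pictured with the following toy model. It keeps a full copy of every snapshot for simplicity, which is an assumption of this sketch and not how Delta stores data (Delta reconstructs versions from its transaction log, and exposes them through read options such as `versionAsOf` and `timestampAsOf`). `VersionedTable` and its methods are hypothetical names.

```python
class VersionedTable:
    """Toy table that keeps every committed snapshot, indexed by version."""

    def __init__(self):
        self._snapshots = [[]]  # version 0 is the empty table

    @property
    def latest_version(self):
        return len(self._snapshots) - 1

    def commit(self, transform):
        # Each change produces a new snapshot; older ones are never mutated.
        current = [dict(row) for row in self._snapshots[-1]]
        self._snapshots.append(transform(current))
        return self.latest_version

    def read(self, version=None):
        v = self.latest_version if version is None else version
        return [dict(row) for row in self._snapshots[v]]

    def restore(self, version):
        # Restoring is itself a new commit, so the history stays auditable.
        return self.commit(lambda _rows: self.read(version))


t = VersionedTable()
v1 = t.commit(lambda rows: rows + [{"id": 1, "price": 10}, {"id": 2, "price": 20}])
v2 = t.commit(lambda rows: [dict(row, price=0) for row in rows])  # faulty update

print(t.read())            # latest data, with the bad prices
print(t.read(version=v1))  # the data as it was before the faulty update

v3 = t.restore(v1)
print(v3, t.read() == t.read(version=v1))
```

A data scientist can pin an experiment to `v1` and rerun it later against exactly the same rows, while the restore shows how a faulty update is repaired without erasing the record that it happened.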

We at Skizzle believe that Delta's features present a significant opportunity for anyone who is just getting started with a Data Lake or who already has one. Delta is a layer that is easy to add on top of an Azure Data Lake, providing true streaming analytics and large-scale data handling together with the benefits of time travel, metadata handling, and ACID transactions.

If you have any additional questions about Delta or how to get started with your Data Lake, please contact us. Our data experts will be happy to assist you.
